Torch is not able to use GPU – Complete Guide – 2024

Many deep learning practitioners struggle to get Torch, a widely used framework, to work with their GPUs.

Ensure you have compatible GPU drivers, the CUDA toolkit where your setup requires it (the official CUDA-enabled PyTorch binaries ship with their own CUDA runtime), and a PyTorch build that supports GPU. Verify device availability with ‘torch.cuda.is_available()’ and move tensors to the GPU using ‘.to(‘cuda’)’.

In this article, we’ll look at why this happens, what you can do to fix it, other ways to solve the problem, and what might happen in the future with Torch and GPUs.

Understanding Torch:

1. Torch Framework Overview:

Torch (used throughout this article to mean PyTorch), an open-source machine learning library, provides a flexible environment for building and training neural networks. Its modular structure and dynamic computation graph set it apart. But what happens when this robust framework cannot make use of the GPU?

2. Torch and GPU Integration:

The integration of Torch with GPUs is crucial for accelerating the training of complex models. The parallel processing capabilities of GPUs significantly enhance the speed of computations, making them indispensable for deep learning tasks.

3. Common Issues Faced:

Users often report encountering issues where Torch fails to recognize or utilize the GPU resources. This can lead to prolonged model training times and suboptimal performance.

Reasons behind Torch’s GPU Issue

1. Compatibility issues

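Torch’s GPU-related problems often stem from compatibility issues between the framework and GPU drivers. Each PyTorch build targets a specific CUDA version, and that CUDA version in turn requires a sufficiently recent NVIDIA driver, so ensuring that these versions align is crucial for seamless integration.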
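One way to see what the installed build expects is to ask PyTorch directly. The sketch below is illustrative only; `build_report` is a hypothetical helper name, not part of PyTorch, while the attributes it reads (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`) are standard.

```python
import torch


def build_report() -> dict:
    """Collect the version facts that matter for GPU compatibility."""
    return {
        # Version of the installed PyTorch package.
        "torch_version": torch.__version__,
        # CUDA version the build was compiled against; None on CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        # True only if the build, the driver, and a visible GPU all line up.
        "cuda_available": torch.cuda.is_available(),
    }


for key, value in build_report().items():
    print(f"{key}: {value}")
```

If `built_with_cuda` prints `None`, the installed package is a CPU-only build and no driver update will make the GPU usable until a CUDA-enabled build is installed.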

2. Driver conflicts

Outdated or incompatible GPU drivers can cause conflicts with Torch, leading to the inability to utilize GPU resources effectively. Regularly updating drivers is essential to avoid such issues.

3. Configuration errors

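Misconfiguration or an incomplete installation can also prevent GPU use. Typical examples are a CPU-only PyTorch build installed in place of a CUDA-enabled one, or an environment variable such as CUDA_VISIBLE_DEVICES hiding the GPU from the process. Thoroughly checking the installation and environment is a key troubleshooting step.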

Troubleshooting Steps

1. Verifying GPU compatibility

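Users encountering Torch GPU issues should first confirm that their GPU is compatible with the framework. PyTorch’s CUDA builds target NVIDIA GPUs, and each release supports a specific range of GPU architectures (compute capabilities). Checking the official documentation and community forums can provide insights into supported GPU models.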
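As a rough illustration, the following sketch lists the GPUs that PyTorch can see together with their compute capability. `describe_gpus` is a hypothetical helper name; the `torch.cuda` calls it uses are standard. An empty result means PyTorch sees no usable CUDA device.

```python
import torch


def describe_gpus() -> list:
    """Return one description line per CUDA device visible to PyTorch."""
    lines = []
    for index in range(torch.cuda.device_count()):
        name = torch.cuda.get_device_name(index)
        major, minor = torch.cuda.get_device_capability(index)
        lines.append(f"{index}: {name} (compute capability {major}.{minor})")
    return lines


gpus = describe_gpus()
if gpus:
    print("\n".join(gpus))
else:
    print("No CUDA device is visible to PyTorch.")
```

The reported compute capability can then be compared against the architectures listed for the installed PyTorch release.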

2. Updating drivers

Keeping GPU drivers up-to-date is crucial for optimal performance. Users should regularly check for updates from the GPU manufacturer’s website and install the latest compatible drivers.
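Before updating, it helps to know which driver is currently installed. The sketch below assumes an NVIDIA GPU and relies on the `nvidia-smi` command-line tool that ships with the NVIDIA driver; `driver_version` is a hypothetical helper name. It returns `None` when the tool is missing or fails, which is itself a hint that the driver is not installed correctly.

```python
import shutil
import subprocess
from typing import Optional


def driver_version() -> Optional[str]:
    """Return the NVIDIA driver version reported by nvidia-smi, or None."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # tool not on PATH: driver missing or not installed properly
    try:
        result = subprocess.run(
            [exe, "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    lines = result.stdout.strip().splitlines()
    # One line is printed per GPU; the driver version is the same for all.
    return lines[0].strip() if lines else None


print("NVIDIA driver version:", driver_version())
```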

3. Adjusting configuration settings

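Reviewing the PyTorch installation and its environment can resolve GPU-related issues. Confirm that the installed build was compiled with CUDA support, that the code runs in the Python environment where that build is installed, and that no environment variable (for example CUDA_VISIBLE_DEVICES) hides the GPU from the process.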
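One configuration detail that is easy to overlook is the `CUDA_VISIBLE_DEVICES` environment variable, which the CUDA runtime reads to decide which GPUs a process may see. The sketch below only inspects that variable; `visible_devices_status` is a hypothetical helper name, and treating an empty value or `-1` as "hide everything" reflects common usage rather than anything PyTorch-specific.

```python
import os
from typing import Mapping, Optional


def visible_devices_status(env: Optional[Mapping[str, str]] = None) -> str:
    """Describe how CUDA_VISIBLE_DEVICES affects GPU visibility."""
    env = os.environ if env is None else env
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return "unset: no GPUs are hidden by this variable"
    if value.strip() in ("", "-1"):
        return "set to hide every GPU"
    return f"restricted to device(s): {value}"


print("CUDA_VISIBLE_DEVICES is", visible_devices_status())
```

The variable must be set before CUDA is initialized in the process, so changing it after the first GPU call generally has no effect.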

The Future of Torch and GPU Integration

1. Community updates and contributions

The Torch community actively works on addressing issues and improving the framework. Regular updates and contributions from users worldwide contribute to the continuous development of Torch, including enhancements in GPU integration.

2. Framework development roadmap

Reviewing Torch’s development roadmap can provide insights into planned features and improvements related to GPU integration. Developers should stay informed about upcoming releases to benefit from advancements in GPU support.

3. Potential solutions on the horizon

The Torch development team may be actively working on solutions to the GPU issue. Keeping an eye on official announcements and release notes can reveal potential fixes or workarounds for users experiencing GPU-related challenges.

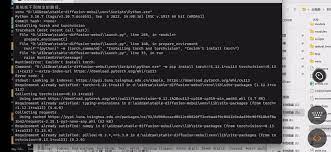

How to Solve the Stable Diffusion Torch Is Unable To Use GPU Issue?

To resolve the “Stable Diffusion Torch Unable to Use GPU” issue, ensure the CUDA toolkit and GPU drivers are installed, use a PyTorch version with GPU support, and check device availability.

Does Torch Support GPU?

Yes, PyTorch, often referred to as “Torch,” supports GPU acceleration. Ensure you have the CUDA toolkit and compatible GPU drivers installed to leverage GPU capabilities in PyTorch.

Torch is Unable to Use GPU?

If Torch is unable to use the GPU, ensure that you have the CUDA toolkit and compatible GPU drivers installed. Verify GPU availability with ‘torch.cuda.is_available()’ and set tensors to GPU using ‘.to(‘cuda’)’.
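A minimal sketch of that check is shown here. `pick_device` is a hypothetical helper name; falling back to the CPU is one reasonable choice for illustration, not the only one.

```python
import torch


def pick_device() -> torch.device:
    """Return the CUDA device when PyTorch can use one, otherwise the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


device = pick_device()
# .to() returns a copy of the tensor on the target device.
x = torch.ones(2, 3).to(device)
print(f"CUDA available: {torch.cuda.is_available()}")
print(f"Tensor lives on: {x.device}")
```

If the first line prints `False`, the tensor stays on the CPU and the problem lies in the driver, the PyTorch build, or the environment rather than in the model code.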

Torch can’t use GPU, but it could before?

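If Torch can’t use the GPU when it could before, check whether the CUDA toolkit, GPU drivers, or PyTorch itself were recently updated or reinstalled. A package install can, for example, replace a CUDA-enabled PyTorch build with a CPU-only one. Ensure the versions are compatible and reinstall if necessary to restore GPU functionality.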

How to solve “Torch is not able to use GPU” error?

To resolve the “Torch is not able to use GPU” error, ensure the CUDA toolkit and compatible GPU drivers are installed. Check that the installed PyTorch version supports GPU, and verify GPU availability using ‘torch.cuda.is_available()’.

GPU is not available for PyTorch?

If the GPU is not available for PyTorch, ensure that you have installed the CUDA toolkit and compatible GPU drivers. Verify GPU availability using ‘torch.cuda.is_available()’ and troubleshoot any installation issues.

Tips for Efficient GPU Usage in Torch

1. Best practices for GPU integration

Implementing best practices for GPU integration ensures smooth collaboration between Torch and GPU resources. This includes optimizing code, utilizing GPU-specific functions, and following recommended guidelines.

2. Optimizing Torch code for GPU

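Developers can enhance Torch code to maximize GPU utilization. This involves structuring code to leverage parallel processing, for example by using batched tensor operations instead of Python loops, keeping the model and its input data on the same device, and avoiding unnecessary transfers between CPU and GPU memory.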
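As an illustration of keeping the model and its inputs on one device, here is a small sketch. The layer sizes and batch size are arbitrary values chosen for the example, and the code falls back to the CPU when no GPU is usable.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Move the model's parameters to the chosen device once, up front.
model = nn.Linear(16, 4).to(device)

# Create the batch directly on the device to avoid an extra CPU-to-GPU copy.
batch = torch.randn(8, 16, device=device)

# One batched forward pass processes all 8 samples in parallel.
with torch.no_grad():
    out = model(batch)

print(out.shape, out.device)
```

Mixing devices (for instance a model on the GPU and a batch on the CPU) raises a runtime error, so choosing the device once and reusing it everywhere avoids a common class of mistakes.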

3. Ensuring smooth performance with GPU acceleration

Regularly monitoring and fine-tuning GPU performance in Torch is essential. Users should be proactive in addressing any emerging issues to maintain optimal efficiency during deep learning tasks.
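For basic monitoring from inside a script, PyTorch exposes counters for the GPU memory it manages. The sketch below wraps a few of them; `memory_summary` is a hypothetical helper name, and it returns an empty dictionary when no GPU is usable.

```python
import torch


def memory_summary(device_index: int = 0) -> dict:
    """Return PyTorch's GPU memory counters in MiB, or {} without a GPU."""
    if not torch.cuda.is_available():
        return {}
    mib = 2 ** 20
    return {
        # Memory currently occupied by live tensors.
        "allocated_mib": torch.cuda.memory_allocated(device_index) / mib,
        # Memory held by PyTorch's caching allocator (includes free cache).
        "reserved_mib": torch.cuda.memory_reserved(device_index) / mib,
        # Highest allocation seen so far in this process.
        "peak_allocated_mib": torch.cuda.max_memory_allocated(device_index) / mib,
    }


print(memory_summary() or "No CUDA device available to monitor.")
```

These numbers cover only memory allocated through PyTorch in the current process; a system tool such as `nvidia-smi` shows overall usage across processes.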

FAQs:

1. Error “Torch is not able to use GPU” when installing Stable Diffusion WebUI?

If you encounter the error “Torch is not able to use GPU” during Stable Diffusion WebUI installation, ensure the CUDA toolkit and compatible GPU drivers are installed. Verify GPU availability using ‘torch.cuda.is_available()’.

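2. Add --skip-torch-cuda-test to COMMANDLINE_ARGS variable to disable this check?

To disable the Torch CUDA test, add “--skip-torch-cuda-test” (including the two leading hyphens) to the COMMANDLINE_ARGS variable in the WebUI launch script. This skips the GPU check at startup and lets you proceed without CUDA testing. Note that it only bypasses the check and does not make the GPU usable, so work typically falls back to the much slower CPU.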

3. Installing Stable Diffusion WebUI: Torch is not able to use GPU?

When installing Stable Diffusion WebUI and encountering the error “Torch is not able to use GPU,” ensure the CUDA toolkit and compatible GPU drivers are installed. Verify GPU availability using ‘torch.cuda.is_available()’ and troubleshoot any installation issues.

4. Is Torch compatible with all GPU models?

No. PyTorch’s CUDA builds target NVIDIA GPUs, and each release supports a specific range of GPU architectures (compute capabilities), so users should check the official documentation for compatibility. Incompatibility issues can arise with older or less common GPU models.

5. What should I do if updating GPU drivers doesn’t solve the issue?

If updating drivers doesn’t resolve the Torch GPU problem, consider checking for configuration errors, exploring alternative deep-learning frameworks, or seeking assistance from the Torch community.

6. Can using cloud-based GPU services be a long-term solution?

Cloud-based GPU services can serve as a reliable alternative for users facing persistent Torch GPU issues. However, long-term feasibility depends on factors like cost, convenience, and specific project requirements.

7. How frequently does Torch release updates for GPU support?

Torch releases updates regularly, and GPU support improvements may be included in these updates. Users are encouraged to stay informed about the framework’s development roadmap for upcoming features.

8. Are there any best practices for optimizing Torch code for GPU?

Yes, optimizing Torch code for GPU involves structuring code for parallel processing, using GPU-specific functions, and adhering to recommended practices. Following best practices enhances overall GPU performance in Torch.

Conclusion:

In conclusion, addressing Torch’s GPU issues involves verifying compatibility, updating GPU drivers, and adjusting configuration settings. The article emphasizes the importance of staying informed about community updates, development roadmaps, and potential solutions. It also provides troubleshooting steps and tips for efficient GPU usage in Torch, ensuring users can harness the power of GPUs seamlessly for enhanced deep learning performance.